Runway released a new artificial intelligence (AI) video generation model on Friday that can edit elements in input videos. Dubbed Aleph (the first letter of the Hebrew alphabet), the video-to-video AI model can perform a wide range of edits on the input, including adding, removing, and transforming objects; generating new angles and subsequent frames; changing the environment, season, and time of day; and transforming the style. The New York City-based AI firm said that the video model will soon be rolled out to its enterprise and creative customers, followed by the platform’s other users.

Runway’s Aleph AI Model Can Edit Videos

AI video generation models have come a long way. We have moved from generating a couple of seconds of an animated scene to generating a full-fledged video with narrative and even audio. Runway has been at the forefront of this innovation with its video generation tools, which are now being used by production houses such as Netflix, Amazon, and Walt Disney.

Now, the AI company has released a new model called Aleph, which can edit input videos. It is a video-to-video model that can manipulate and generate a wide range of elements. In a post on X (formerly known as Twitter), the company called it a state-of-the-art (SOTA) in-context video model that can transform an input video with simple descriptive prompts.

Introducing Runway Aleph, a new way to edit, transform and generate video.

Aleph is a state-of-the-art in-context video model, setting a new frontier for multi-task visual generation, with the ability to perform a wide range of edits on an input video such as adding, removing… pic.twitter.com/zGdWYedMqM

— Runway (@runwayml) July 25, 2025

In a blog post, the AI firm also showcased some of the capabilities Aleph will offer when it becomes available. Runway has said that the model will first be provided to its enterprise and creative customers and will then be released broadly to all its users in the coming weeks. However, the announcement does not clarify whether users on the free tier will also get access to the model or whether it will be reserved for paid subscribers.

As for its capabilities, Aleph can take an input video and generate new angles and views of the same scene. Users can request a reverse low shot, an extreme close-up from the right side, or a wide shot of the entire stage. It can also use the input video as a reference and generate the subsequent frames of the video based on prompts.

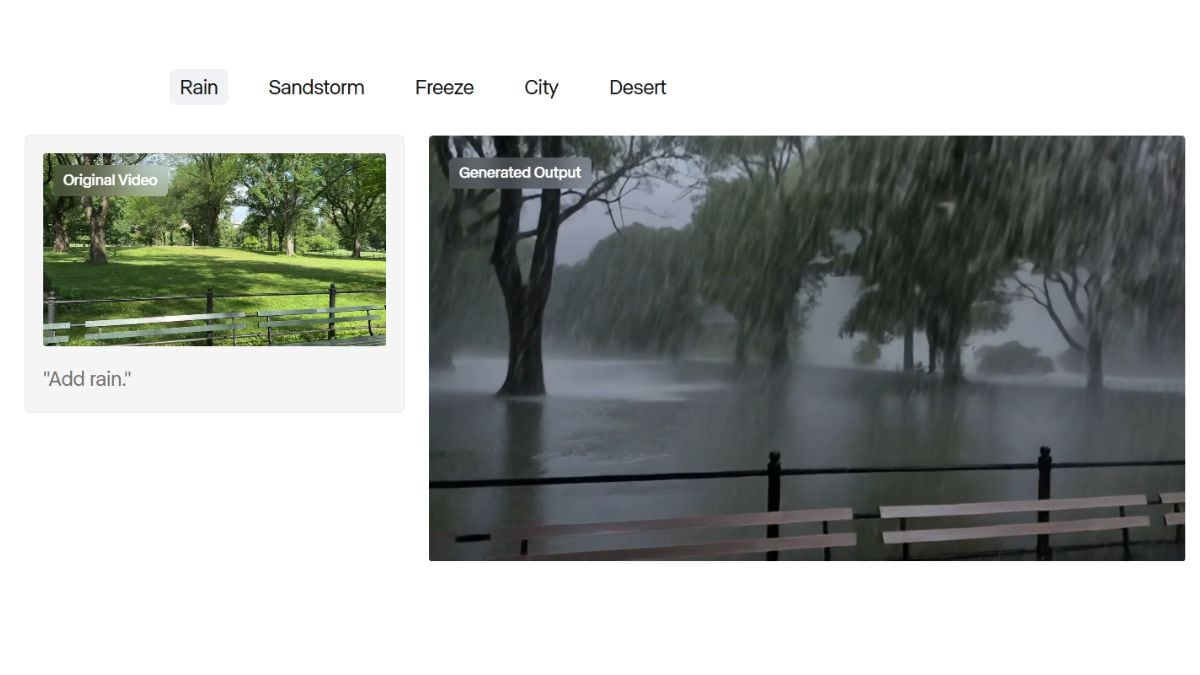

One of the most impressive abilities of the AI video model is transforming environments, locations, seasons, and the time of day. This means users can upload a video of a park on a sunny morning, and the AI model can add rain, a sandstorm, or snowfall, or even make it look like night-time. These changes are made while keeping all the other elements of the video as they are.

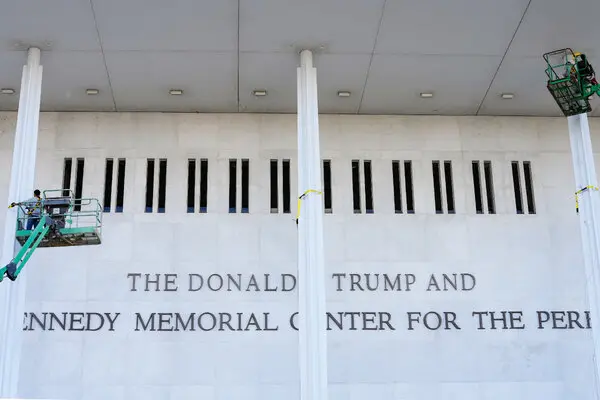

Aleph can also add objects to the video, remove things like reflections and buildings, change objects and materials entirely, change the appearance of a character, and recolour objects. Additionally, Runway claims the AI model can take a particular motion from a video (for example, a flying drone sequence) and apply it to a different setting.

Runway has not yet shared any technical details about the AI model, such as the supported length of the input video, supported aspect ratios, or application programming interface (API) charges. These will likely be shared when the company officially releases the model.